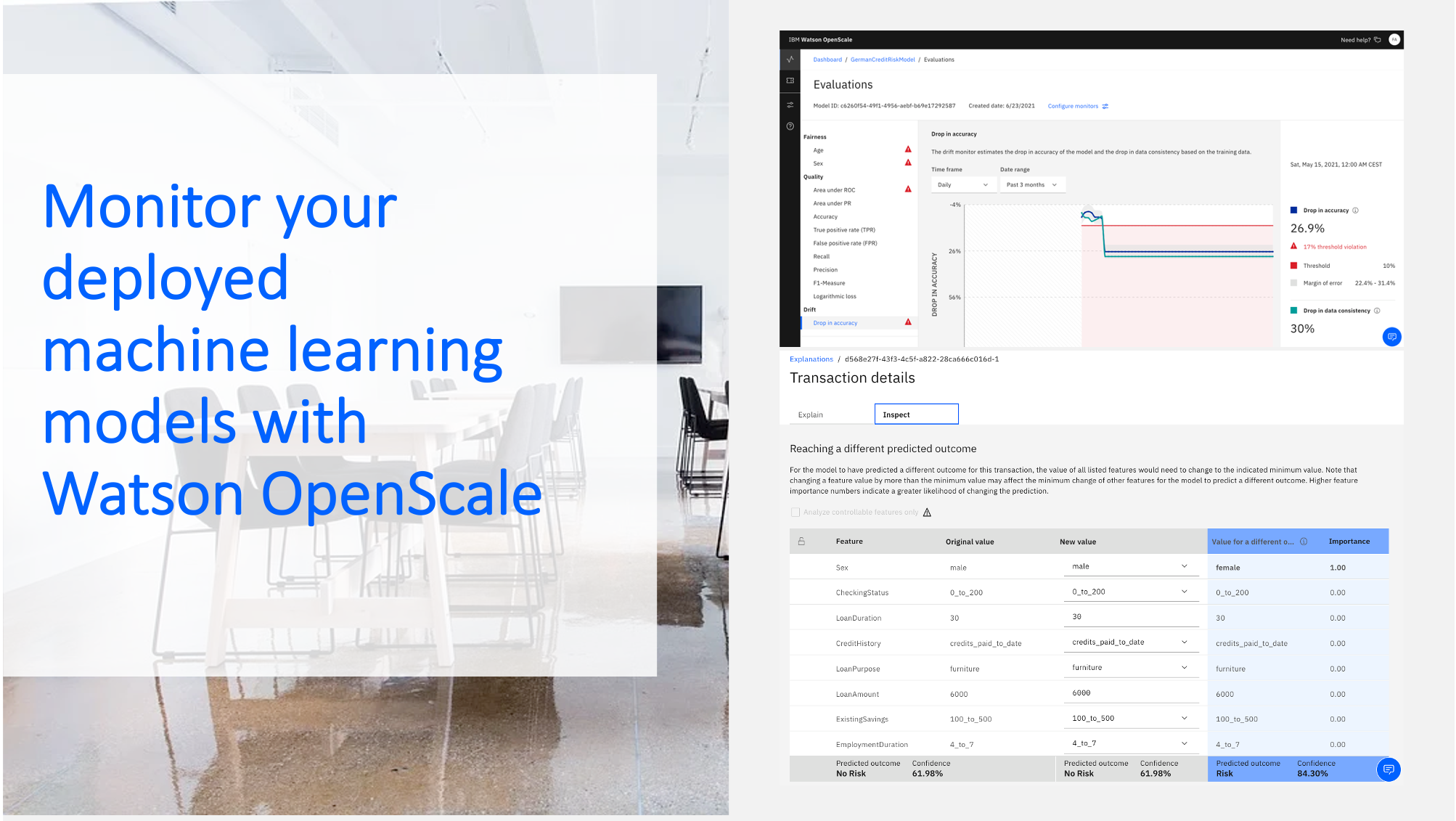

In this hands-on tutorial, you will learn how Watson OpenScale can be used to monitor your deployed machine learning models.

If you don’t have one already, please sign up for an IBM Cloud account.

This tutorial consists of six parts. You can start with Part I or any other part; however, the necessary environment is set up in Part I.

- Part I – data visualization, preparation, and transformation

- Part II – build and evaluate machine learning models by using AutoAI

- Part III – graphically build and evaluate machine learning models by using SPSS Modeler flow

- Part IV – set up and run Jupyter Notebooks to develop a machine learning model

- Part V – deploy a local Python app to test your model

- Part VI – monitor your model with Watson OpenScale

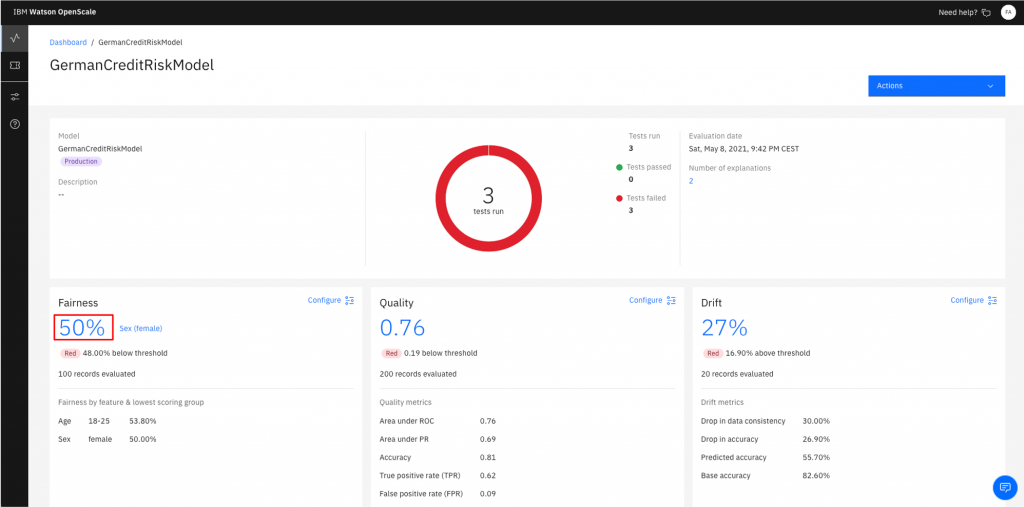

Deployed models can be biased or can become less accurate over time, which makes their predictions less reliable. To trust machine learning models and artificial intelligence, you need to monitor deployed models. This is where Watson OpenScale comes into play: it provides three kinds of monitors:

- Fairness monitor – looks for biased outcomes from your model. If there is a fairness issue, a warning icon appears.

- Quality monitor – determines how well your model predicts outcomes. When quality monitoring is enabled, it generates a set of metrics every hour by default.

- Drift monitor – determines if the data the model is processing is causing a drop in accuracy over time.
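To make the three monitors more concrete, here is a minimal, conceptual Python sketch of the kind of arithmetic behind them. It is not the Watson OpenScale API, and OpenScale computes its metrics differently and in more detail (for example, its drift monitor estimates the accuracy drop without needing labels for every record). The function names, toy data, group labels `A`/`B`, and the thresholds (0.8 for disparate impact and accuracy, 0.05 for the accuracy drop) are all hypothetical. Fairness is illustrated with disparate impact, one common fairness metric: the favorable-outcome rate of a monitored group divided by that of a reference group.

```python
# Conceptual sketch of the ideas behind the fairness, quality, and drift monitors.
# NOT the Watson OpenScale API; all names, data, and thresholds are hypothetical.
from typing import Sequence


def favorable_rate(outcomes: Sequence[int], groups: Sequence[str], group: str) -> float:
    """Share of records in `group` that received the favorable outcome (1)."""
    selected = [o for o, g in zip(outcomes, groups) if g == group]
    return sum(selected) / len(selected)


def disparate_impact(outcomes: Sequence[int], groups: Sequence[str],
                     monitored: str, reference: str) -> float:
    """Favorable rate of the monitored group divided by that of the reference group."""
    return (favorable_rate(outcomes, groups, monitored)
            / favorable_rate(outcomes, groups, reference))


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the labeled outcome."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def accuracy_drop(baseline: float, current: float) -> float:
    """How much accuracy has fallen relative to a baseline (positive = worse)."""
    return baseline - current


if __name__ == "__main__":
    # Fairness: hypothetical predictions (1 = favorable) for two groups.
    outcomes = [1, 0, 0, 1, 1, 1, 1, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    di = disparate_impact(outcomes, groups, monitored="A", reference="B")
    print(f"disparate impact: {di:.2f}, flagged: {di < 0.8}")  # hypothetical threshold

    # Quality: compare predictions with labeled feedback data.
    y_true = [1, 0, 1, 1, 0, 1, 0, 0]
    y_pred = [1, 0, 1, 0, 0, 1, 1, 0]
    acc = accuracy(y_true, y_pred)
    print(f"accuracy: {acc:.2f}, flagged: {acc < 0.8}")  # hypothetical threshold

    # Drift: compare current accuracy with a hypothetical baseline accuracy.
    drop = accuracy_drop(0.85, acc)
    print(f"accuracy drop: {drop:.2f}, flagged: {drop > 0.05}")  # hypothetical threshold
```

In this toy data, group `A` receives the favorable outcome half as often as it occurs in its records (0.5) while group `B` receives it three quarters of the time (0.75), so the disparate impact is about 0.67 and would be flagged under the assumed 0.8 threshold.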

To access the complete tutorial, go to this GitHub repository.